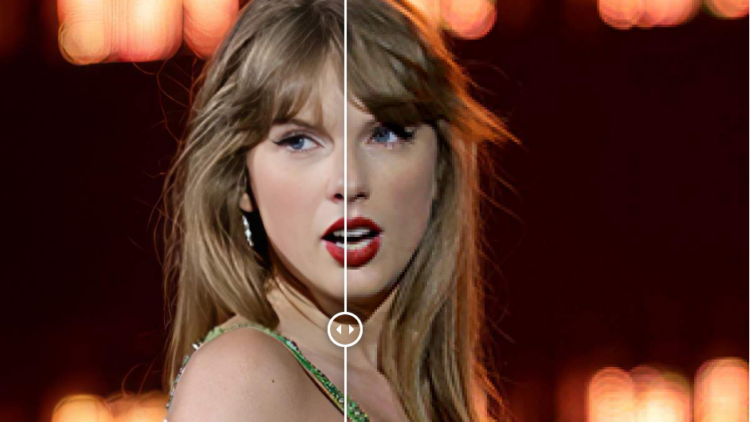

In this video, I explore several methods for colorizing black-and-white photos, including both free and paid options. I focus on DDColor models, which are free and effective.

AI colorization is not always accurate, but it can produce good results. I also discuss why training data matters: it is what teaches the model how the different parts of a photo should be colored.

Here is a comparison of the methods discussed in the video:

- Paid online services: I briefly review three paid services: Palette.fm, DeepAI, and Hotpot.ai. Palette.fm seems to be the most promising, but I recommend trying the free alternatives first.

- Photoshop Colorize: This built-in Photoshop neural filter can be useful, but the result often needs further adjustment in Photoshop before it looks good.

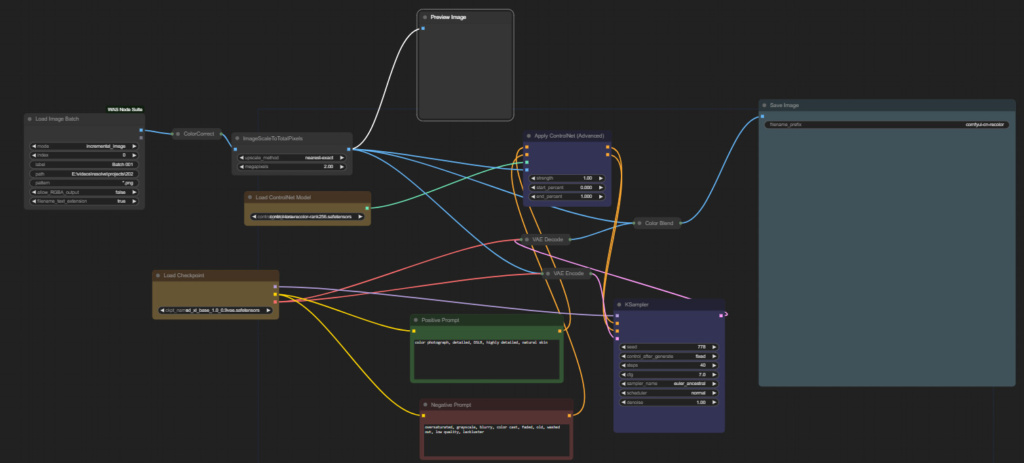

- AUTOMATIC1111 with ControlNet and Stable Diffusion: This method gives good-quality results, but it is time-consuming.

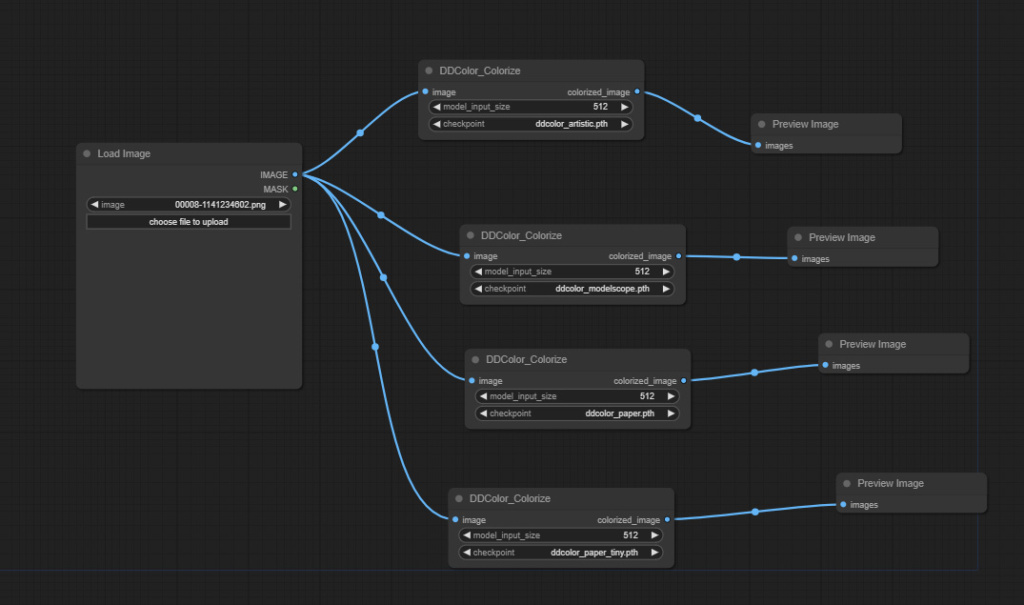

- DDColor models: These free models are easy to use and produce good results in seconds. They can be used in ComfyUI.

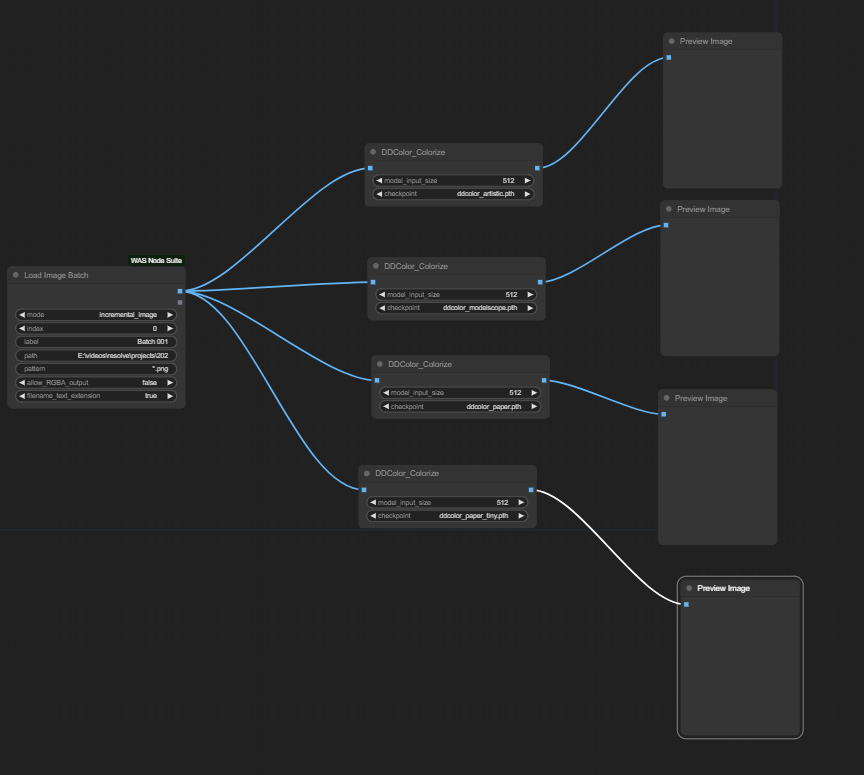

Overall, I recommend trying several methods to find the one that works best for a particular photo. DDColor models are a good place to start, since they are free, easy to use, and very fast. That is what I do myself: I combine different methods, depending on what I am after, until I get a result I am happy with. At the end, I included three ComfyUI workflows: two with DDColor nodes and one that uses a ControlNet recolor model and a Stable Diffusion 1.5 checkpoint.

ComfyUI workflows

The three workflows are stored as JSON files in this 7z file, which you can download.

1. The first is the one shown in the video. It uses the same node four times, each instance with one of the four DDColor models.

2. The same as the first, but its source is a node that accepts a path, so it can loop through all the files in a folder. This is useful for batch recoloring, e.g. video frames. However, the results are inconsistent, since there is no text prompt to give you any control over the output.

3. A method I didn't use in this video but use often, especially on old photos: ControlNet recolor models with SD1.5 and SDXL checkpoints. The workflow in the 7z file uses an SDXL checkpoint with a VAE; to use an SD1.5 checkpoint instead, you will need an SD1.5-compatible ControlNet recolor model.
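When the batch workflow is used on video frames, the order of the files in the folder matters. As a minimal sketch, assuming the frames are image files with numbers in their names (for example `frame_1.png`, `frame_10.png`), the hypothetical Python helper below lists them in natural numeric order, so `frame_2.png` comes before `frame_10.png`. It is independent of ComfyUI and does not reproduce how any particular loader node sorts files; the names `natural_key`, `list_frames`, and `IMAGE_EXTENSIONS` are illustrative only.

```python
import re
from pathlib import Path

# Assumed set of frame extensions (hypothetical); adjust to match your files.
IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg", ".webp"}


def natural_key(path: Path) -> list:
    """Sort key that compares digit runs as numbers ("frame_2" < "frame_10")."""
    # re.split with a capturing group alternates text and digit runs:
    # "frame_10.png" -> ["frame_", "10", ".png"], so odd indices are digits.
    parts = re.split(r"(\d+)", path.name)
    return [int(part) if i % 2 else part.lower() for i, part in enumerate(parts)]


def list_frames(folder) -> list:
    """Return the image files directly inside `folder`, in natural numeric order."""
    folder = Path(folder)
    frames = [
        p for p in folder.iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_EXTENSIONS
    ]
    return sorted(frames, key=natural_key)
```

For example, `for frame in list_frames("frames"): print(frame.name)` prints the frame names in playback order, which is a quick way to check a folder before pointing the path node at it. Renaming frames with zero-padded numbers (`frame_0001.png`) makes plain alphabetical sorting agree with this order too.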

Information about the ControlNet recolor models¹ is also in the video description.